Bayesian Linear Regression

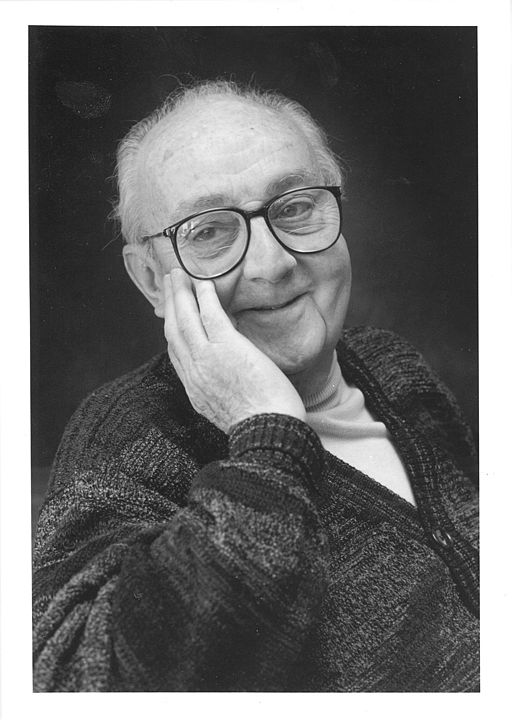

"All models are wrong but some are useful"

George Box (Box, 1976)

This tutorial begins with a very provocative quote from the statistician George Box (figure below) on statistical models. Yes, all models are somehow wrong. But they are very useful. The idea is that the reality is too complex for us to understand when analyzing it in a naked and raw way. We need to somehow simplify it into individual components and analyze their relationships. But there is a danger here: any simplification of reality promotes loss of information in some way. Therefore, we always have a delicate balance between simplifications of reality through models and the inherent loss of information. Now you ask me: "how are they useful?" Imagine that you are in total darkness and you have a very powerful flashlight but with a narrow beam. Are you going to throw the flashlight away because it can't light everything around you and stay in the dark? You must use the flashlight to aim at interesting places in the darkness in order to illuminate them. You will never find a flashlight that illuminates everything with the clarity you need to analyze all the fine details of reality. Just as you will never find a unique model that will explain the whole reality around you. You need different flashlights just like you need different models. Without them you will be in total darkness.

Linear Regression

Let's talk about a class of model known as linear regression. The idea here is to model a continuous dependent variable with a linear combination of independent variables.

where:

– dependent variable

– intercept

– coefficient vector

– data matrix

– model error

To estimate the coefficients we use a Gaussian/normal likelihood function. Mathematically the Bayesian regression model is:

Here we see that the likelihood function is a normal distribution in which depends on the parameters of the model and , in addition to having an error . We condition onto the observed data by inserting as the linear predictor of the model (the mean parameter of the model's Normal likelihood function, and is the variance parameter). What remains is to specify which are the priors of the model parameters:

Prior Distribution of – Knowledge we possess regarding the model's intercept.

Prior Distribution of – Knowledge we possess regarding the model's independent variables' coefficients.

Prior Distribution of – Knowledge we possess regarding the model's error. Important that the error can only be positive. In addition, it is intuitive to place a distribution that gives greater weight to values close to zero, but that also allows values that are far from zero, so a distribution with a long tail is welcome. Candidate distributions are which is only supported on positive real numbers (so it solves the question of negative errors) or truncated to only positive numbers (remembering that the distribution Cauchy is Student's with degrees of freedom ).

Our goal is to instantiate a linear regression with the observed data ( and ) and find the posterior distribution of our model's parameters of interest ( and ). This means to find the full posterior distribution of:

This is easily accomplished with Turing:

using Turing

using LinearAlgebra: I

using Statistics: mean, std

using Random: seed!

seed!(123)

@model function linreg(X, y; predictors=size(X, 2))

#priors

α ~ Normal(mean(y), 2.5 * std(y))

β ~ filldist(TDist(3), predictors)

σ ~ Exponential(1)

#likelihood

return y ~ MvNormal(α .+ X * β, σ^2 * I)

end;Here I am specifying very weakly informative priors:

– This means a normal distribution centered on

y's mean with a standard deviation 2.5 times the standard deviation ofy. That prior should with ease cover all possible values of . Remember that the normal distribution has support over all the real number line .– The predictors all have a prior distribution of a Student- distribution centered on 0 with variance 1 and degrees of freedom . That wide-tailed distribution will cover all possible values for our coefficients. Remember the Student- also has support over all the real number line . Also the

filldist()is a nice Turing's function which takes any univariate or multivariate distribution and returns another distribution that repeats the input distribution.– A wide-tailed-positive-only distribution perfectly suited for our model's error.

Also, we are using the MvNormal construction where we specify both a vector of means (first positional argument) and a covariance matrix (second positional argument). Regarding the covariance matrix σ^2 * I, it uses the model's errors σ, here parameterized as a standard deviation, squares it to produce a variance paramaterization, and multiplies by I, which is Julia's LinearAlgebra standard module implementation to represent an identity matrix of any size.

Example - Children's IQ Score

For our example, I will use a famous dataset called kidiq (Gelman & Hill, 2007), which is data from a survey of adult American women and their respective children. Dated from 2007, it has 434 observations and 4 variables:

kid_score: child's IQmom_hs: binary/dummy (0 or 1) if the child's mother has a high school diplomamom_iq: mother's IQmom_age: mother's age

Ok let's read our data with CSV.jl and output into a DataFrame from DataFrames.jl:

using DataFrames

using CSV

using HTTP

url = "https://raw.githubusercontent.com/storopoli/Bayesian-Julia/master/datasets/kidiq.csv"

kidiq = CSV.read(HTTP.get(url).body, DataFrame)

describe(kidiq)4×7 DataFrame

Row │ variable mean min median max nmissing eltype

│ Symbol Float64 Real Float64 Real Int64 DataType

─────┼──────────────────────────────────────────────────────────────────────

1 │ kid_score 86.7972 20 90.0 144 0 Int64

2 │ mom_hs 0.785714 0 1.0 1 0 Int64

3 │ mom_iq 100.0 71.0374 97.9153 138.893 0 Float64

4 │ mom_age 22.7857 17 23.0 29 0 Int64As you can see from the describe() output, the mean children's IQ is around 87 while the mother's is 100. Also the mother's range from 17 to 29 years with mean of around 23 years old. Finally, note that 79% of mothers have a high school diploma.

Now let's us instantiate our model with the data:

X = Matrix(select(kidiq, Not(:kid_score)))

y = kidiq[:, :kid_score]

model = linreg(X, y);And, finally, we will sample from the Turing model. We will be using the default NUTS() sampler with 1_000 samples, but now we will sample from 4 Markov chains using multiple threads MCMCThreads():

chain = sample(model, NUTS(), MCMCThreads(), 1_000, 4)

summarystats(chain)Summary Statistics

parameters mean std mcse ess_bulk ess_tail rhat ess_per_sec

Symbol Float64 Float64 Float64 Float64 Float64 Float64 Float64

α 21.5126 8.6720 0.2217 1528.8886 1868.2612 1.0009 24.7261

β[1] 2.0014 1.7943 0.0419 2281.1940 1734.6512 1.0016 36.8928

β[2] 0.5788 0.0584 0.0013 2163.9754 2292.8814 1.0006 34.9971

β[3] 0.2566 0.3092 0.0074 1762.0214 2135.6795 1.0010 28.4965

σ 17.8859 0.6033 0.0106 3271.1669 2347.2435 1.0008 52.9033

We had no problem with the Markov chains as all the rhat are well below 1.01 (or above 0.99). Our model has an error σ of around 18. So it estimates IQ±9. The intercept α is the basal child's IQ. So each child has 22±9 IQ before we add the coefficients multiplied by the child's independent variables. And from our coefficients , we can see that the quantile() tells us the uncertainty around their estimates:

quantile(chain)Quantiles

parameters 2.5% 25.0% 50.0% 75.0% 97.5%

Symbol Float64 Float64 Float64 Float64 Float64

α 4.7278 15.7633 21.2942 27.4322 38.4426

β[1] -0.5876 0.7324 1.6761 2.9919 6.3388

β[2] 0.4662 0.5392 0.5793 0.6184 0.6924

β[3] -0.3477 0.0440 0.2588 0.4733 0.8490

σ 16.7525 17.4685 17.8796 18.2703 19.1238

β[1]– first column ofX,mom_hs, has 95% credible interval that is all over the place, including zero. So its effect on child's IQ is inconclusive.β[2]– second column ofX,mom_iq, has a 95% credible interval from 0.46 to 0.69. So we expect that every increase in the mother's IQ is associated with a 0.46 to 0.69 increase in the child's IQ.β[3]– third column ofX,mom_age, has also 95% credible interval that is all over the place, including zero. Likemom_hs, its effect on child's IQ is inconclusive.

That's how you interpret 95% credible intervals from a quantile() output of a linear regression Chains object.

References

Box, G. E. P. (1976). Science and Statistics. Journal of the American Statistical Association, 71(356), 791–799. https://doi.org/10.2307/2286841

Gelman, A., & Hill, J. (2007). Data analysis using regression and multilevel/hierarchical models. Cambridge university press.